Sometimes it seems like the world of big data is really the world of abundant small and conflicting data sets. The proliferation of data sources has benefits, but it also increases confusion because of the multitude of conflicting data points and analyses. This problem is often compounded when people who don’t understand the data rush to publish their “insights” about it. The errors are simple, but they lead to wrong conclusions. Mobile advertising appears to be a clear victim of this trend. It often seems like there is a lot of data, but little truth.

Is In-App Advertising More Effective than Mobile Web Advertising?

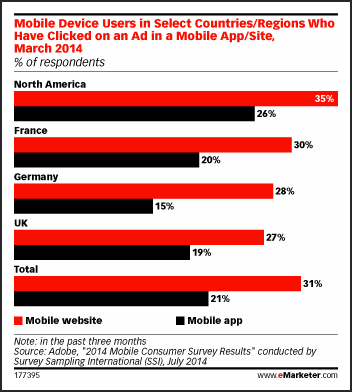

If you read a recent article by eMarketer, you might conclude that mobile web advertising is much more effective than in-app advertising. It cites a recent study by Survey Sampling International (SSI), which found that more than one-third of consumers had clicked on a mobile website ad in the previous three months, while only 26% had clicked on an in-app mobile ad.

This might lead you to conclude that mobile web ads achieve higher conversion. However, a recent study by Medialets found just the opposite: click-through rates (CTR) for in-app mobile ads were more than twice those for mobile web ads. Which report should you rely on? Let’s look at another example before tackling this question.

Are Ads More Effective on Smartphones than on Tablets?

The same eMarketer article also refers to a recent Nielsen survey that found smartphone users were more likely than tablet users to click on an ad, by a margin of 43% to 37%. Again, this conflicts with the Medialets data, which shows ad CTR on tablets to be 44% higher than on smartphone handsets.

It could be that the 37% of tablet users in the Nielsen study who clicked on ads each clicked on several during the period, while the smartphone users clicked on only one. The survey makes no distinction in the number of clicks during the period; it records only that respondents had clicked on one or more ads. Effectiveness, however, depends more on the total number of clicks an ad generates, so the Nielsen data may be misleading.
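To see how a “clicked at least once” survey figure and a click-through rate can point in opposite directions, here is a minimal Python sketch. The panel sizes, the 50 ads served per user, and the per-user click counts are invented for illustration and are not taken from the Nielsen or Medialets studies; the function names are likewise hypothetical.

```python
def share_who_clicked(clicks_per_user):
    """Survey-style metric: fraction of users who clicked at least once."""
    return sum(1 for c in clicks_per_user if c > 0) / len(clicks_per_user)


def click_through_rate(clicks_per_user, impressions_per_user):
    """Log-style metric: total clicks divided by total impressions served."""
    total_clicks = sum(clicks_per_user)
    total_impressions = impressions_per_user * len(clicks_per_user)
    return total_clicks / total_impressions


# Hypothetical panels of 100 users each; every user is assumed to see 50 ads.
smartphone = [1] * 43 + [0] * 57  # 43 users clicked exactly once
tablet = [3] * 37 + [0] * 63      # 37 users clicked three times each

for name, clicks in (("smartphone", smartphone), ("tablet", tablet)):
    print(f"{name}: clicked at least once = {share_who_clicked(clicks):.0%}, "
          f"CTR = {click_through_rate(clicks, 50):.2%}")
```

With these made-up numbers, 43% of smartphone users versus 37% of tablet users clicked at least once, yet the tablet CTR (2.22%) is more than double the smartphone CTR (0.86%).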

Comparing Actual Data vs. Sampled or Reported Data

This brings us to another key point about data. Traditionally, marketers relied on sampled data. Gathering complete data was impractical because it would have meant reaching every consumer. Nielsen became the king of sampling. Everyone knew the data contained error, but they hoped the error was either small or consistent enough that, over time, the trend line would offer some semblance of accuracy. Much of that data was also voluntary response data: it relied on consumers to recall their behavior correctly and report it accurately. Consumers can offer insight into what they remember, but that doesn’t necessarily reflect what actually happened.
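Sampling error itself can be estimated. The sketch below uses the standard normal-approximation margin of error for a sample proportion, z * sqrt(p * (1 - p) / n), with z = 1.96 for roughly 95% confidence. The sample size of 1,000 per device group is an assumption for illustration, since the surveys’ actual group sizes are not given here. The formula covers random sampling error only; it does nothing to correct recall errors in voluntary response data.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Half-width of an approximate 95% confidence interval for a sample
    proportion (normal approximation). Reflects random sampling error only,
    not recall or self-report bias."""
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical sample size per device group; not taken from the surveys.
n = 1000
for label, p in (("smartphone", 0.43), ("tablet", 0.37)):
    moe = margin_of_error(p, n)
    print(f"{label}: {p:.0%} +/- {moe:.1%}")
```

Under that assumption, each of the 43% and 37% figures carries a margin of roughly plus or minus 3 percentage points.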

We have seen recently how sampling issues can lead to significant errors. Earlier this year in Los Angeles, Nielsen admitted that two households had significantly skewed its radio ratings data. Nielsen’s LA radio market sample reportedly includes 2,700 households, yet just two of them created enough skew that the data was withheld altogether. This is the problem with small data sets: outliers can have a big impact.
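The effect of a couple of outliers is easy to reproduce in miniature. In this hypothetical sketch, 2,700 sampled households report weekly hours spent listening to one small station. The listening figures are invented, and Nielsen’s actual weighting and ratings methodology are not modeled.

```python
from statistics import mean

# Hypothetical weekly hours that 2,700 sampled households spend on one station.
typical = [5.0] * 50 + [0.0] * 2648  # 50 light listeners; the rest never tune in
heavy = [120.0, 120.0]               # two households with the radio on all day

with_outliers = mean(typical + heavy)
without_outliers = mean(typical)

print(f"mean hours with the two households:    {with_outliers:.3f}")
print(f"mean hours without the two households: {without_outliers:.3f}")
print(f"relative change: {with_outliers / without_outliers - 1:.0%}")
```

Here the two heavy-listening households nearly double the estimated average audience (a change of about 96%), even though they make up less than 0.1% of the sample.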

The big change with digitally delivered content is that sampling is not required. Every session is recorded, as is every ad served. Publishers know exactly how ads perform, which removes sampling error from their data sets altogether. This is a key difference between the Medialets data and the Nielsen and SSI data presented above. Medialets reported working from a big data set of more than 300 billion data points over six months, while the other studies drew on fewer than 2,000 respondents.
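When every impression and click is logged, a metric such as CTR becomes a simple count rather than an estimate. The sketch below assumes a hypothetical log format of (segment, event type) pairs with made-up counts; real ad-server logs will have their own schemas, and the function name `ctr_by_segment` is illustrative only.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical event format: (segment, event_type), e.g. ("in_app", "click").
Event = Tuple[str, str]


def ctr_by_segment(events: Iterable[Event]) -> Dict[str, float]:
    """Count every impression and click per segment and return
    clicks / impressions. Because the log holds every event served,
    no sampling is involved."""
    impressions: Counter = Counter()
    clicks: Counter = Counter()
    for segment, event_type in events:
        if event_type == "impression":
            impressions[segment] += 1
        elif event_type == "click":
            clicks[segment] += 1
    return {seg: clicks[seg] / n for seg, n in impressions.items()}


# Hypothetical log: 1,000 impressions per segment with different click counts.
log = ([("in_app", "impression")] * 1000 + [("in_app", "click")] * 12
       + [("mobile_web", "impression")] * 1000 + [("mobile_web", "click")] * 5)
print(ctr_by_segment(log))
```

Segmenting by device, placement, or any other field is just a matter of choosing a different key, with no sampling error to account for.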

Digital Starts from an Assumption of Actual Data

Recently, I was discussing audience measurement with a member of a radio industry council. It quickly became clear that we started from different vantage points. The XAPP viewpoint starts from how to present and segment all audio ad conversion data, not how to interpret a sample of it. We were both interested in better understanding audiences and ad unit performance, but thankfully we have actual behavioral data as a starting point.

Interactive Audio Ads combine radio’s ability to capture consumer attention with the convenience of mobile, and layer on voice interactivity to drive higher engagement rates. Just as important, every ad served produces detailed, actual performance data. There is no guesswork in measuring how many people were reached or how many converted through audio advertising.

There is clearly value in a variety of research methods and approaches. The Nielsen and SSI data provide useful information, but it is important to dig beneath the surface before committing your ad budget. When viewing multiple sources, it can be just as valuable to note what they have in common as what appears to be in conflict. Nielsen, SSI, Medialets and a variety of other sources seem to agree on one basic point: mobile advertising conversion rates are measurable, high and improving.
